From the Laptop

Beyond the Hype: Practical AI Agents for Business Automation on GCP

July 2, 2025 by

Kee

Introduction: Cutting Through the AI Noise

Hey everyone! 👋

If you're like me, your inbox and news feeds are overflowing with talk about

AI. "Gen AI this," "Large Language Models that"—it's thrilling, yes, but it can

feel a bit... confusing, right? The real question for businesses isn't just "What

can AI do?" but "How can AI actually help my business right now, in the real world,

to be more efficient and productive?"

That's precisely what I want to talk about: AI Agents. These aren't just theoretical

builds; they're the next frontier in business automation, and with the power of Google

Cloud Platform (GCP), we can turn them into tangible, efficiency-boosting assets for

your organization.

It's really not science fiction! Just hear me out. Let's dive into how intelligent

assistants can revolutionize your operations and deliver measurable results.

What Exactly Are "AI Agents" in a Business Context?

Think of a highly specialized, autonomous software "robot" designed to achieve

specific goals. Unlike traditional automation, which follows rigid rules, AI

agents can:

- Perceive: Understand their environment by processing data (text, numbers,

images, real-time feeds).

- Reason: Make intelligent decisions based on their understanding and

predefined objectives.

- Act: Take specific actions, interact with other systems (APIs, databases,

software tools), and even communicate with humans.

- Learn: Improve over time as they process more data and execute more tasks.

They don't just execute a single command; they orchestrate multiple steps to complete a complex task,

often with minimal human intervention.
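
To make the perceive / reason / act / learn loop concrete, here's a minimal Python sketch. It's a toy under loud assumptions: the `TicketAgent` class, its keyword routing table, and the queue names are all made up for illustration, and a real agent would swap the keyword lookup for an LLM call.

```python
from dataclasses import dataclass, field


@dataclass
class TicketAgent:
    """Toy agent: perceives a ticket, reasons about it, acts, and learns."""
    # Hypothetical keyword -> queue table; a real agent would call an LLM here.
    routes: dict = field(
        default_factory=lambda: {"refund": "billing", "password": "it-support"}
    )
    history: list = field(default_factory=list)

    def perceive(self, raw_text: str) -> list:
        # Perceive: turn raw input into normalized tokens.
        return raw_text.lower().split()

    def reason(self, tokens: list) -> str:
        # Reason: pick the first queue whose keyword appears, else escalate.
        for keyword, queue in self.routes.items():
            if keyword in tokens:
                return queue
        return "human-review"

    def act(self, raw_text: str) -> str:
        # Act: run the loop end to end and record the decision.
        queue = self.reason(self.perceive(raw_text))
        self.history.append((raw_text, queue))
        return queue

    def learn(self, keyword: str, queue: str) -> None:
        # Learn: fold human feedback back into the routing table.
        self.routes[keyword.lower()] = queue


agent = TicketAgent()
first = agent.act("I need a refund for my order")
unknown = agent.act("My invoice is wrong")
agent.learn("invoice", "billing")
after_feedback = agent.act("My invoice is wrong")
```

The second ticket goes to `human-review` the first time around; once a human teaches the agent that "invoice" belongs to billing, the same ticket is routed automatically.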

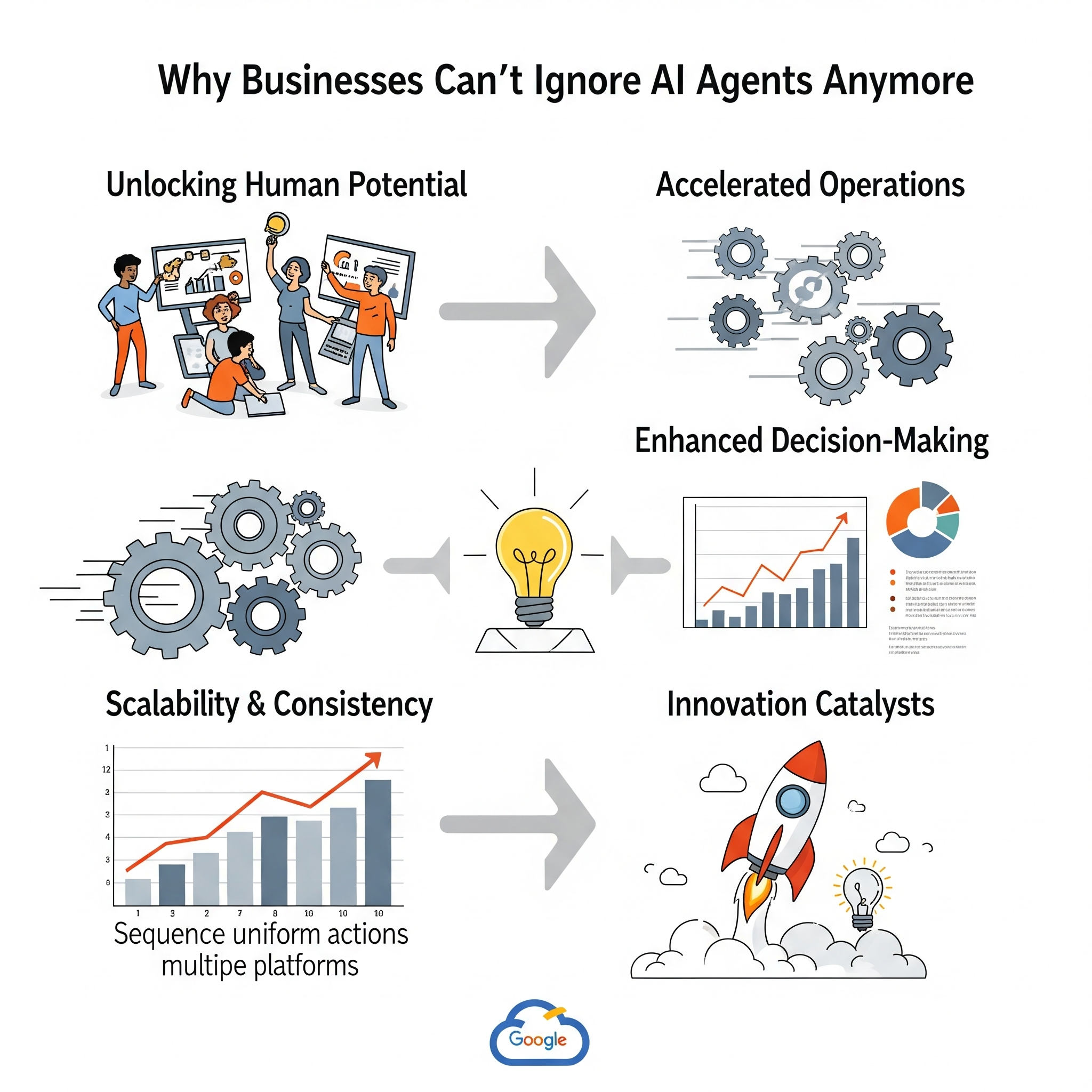

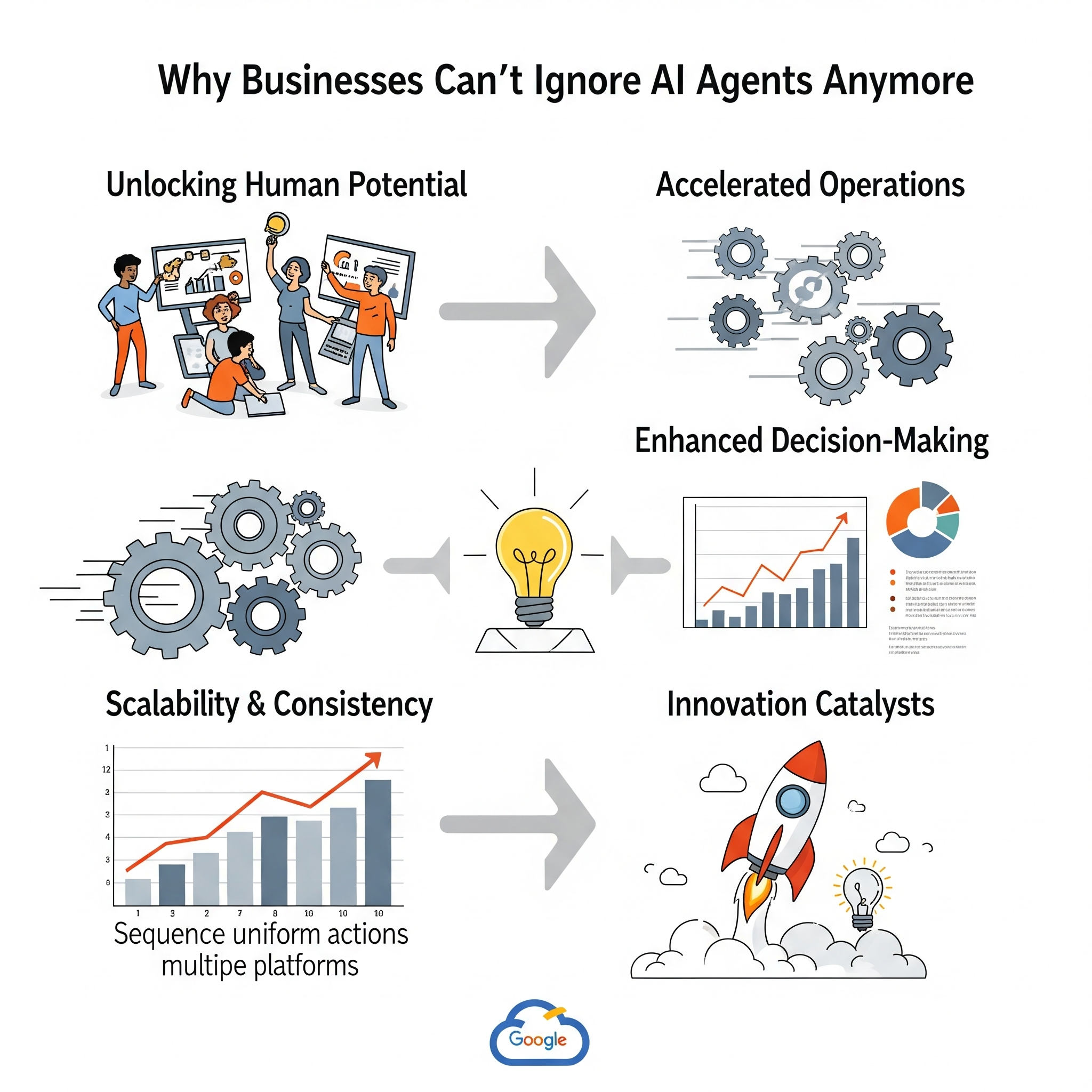

Why Businesses Can't Ignore AI Agents Anymore

The potential for real-world efficiency gains from AI agents is truly exciting. Imagine tasks

that currently consume hours of human time being handled autonomously, 24/7. This isn't just

about cost savings (though that's a huge part of it!); it's about:

- Unlocking Human Potential: Freeing your team from repetitive, tedious

tasks so they can focus on strategic thinking, creativity, and high-value problem-solving.

- Accelerated Operations: Speeding up workflows dramatically, from data

processing to customer service responses.

- Enhanced Decision-Making: Providing real-time insights and recommendations

by continuously analyzing vast datasets, leading to smarter, faster decisions.

- Scalability & Consistency: Handling increased workloads seamlessly and

performing tasks with consistent accuracy every single time.

- Innovation Catalysts: Automating foundational work allows your teams to

innovate faster and explore new opportunities.

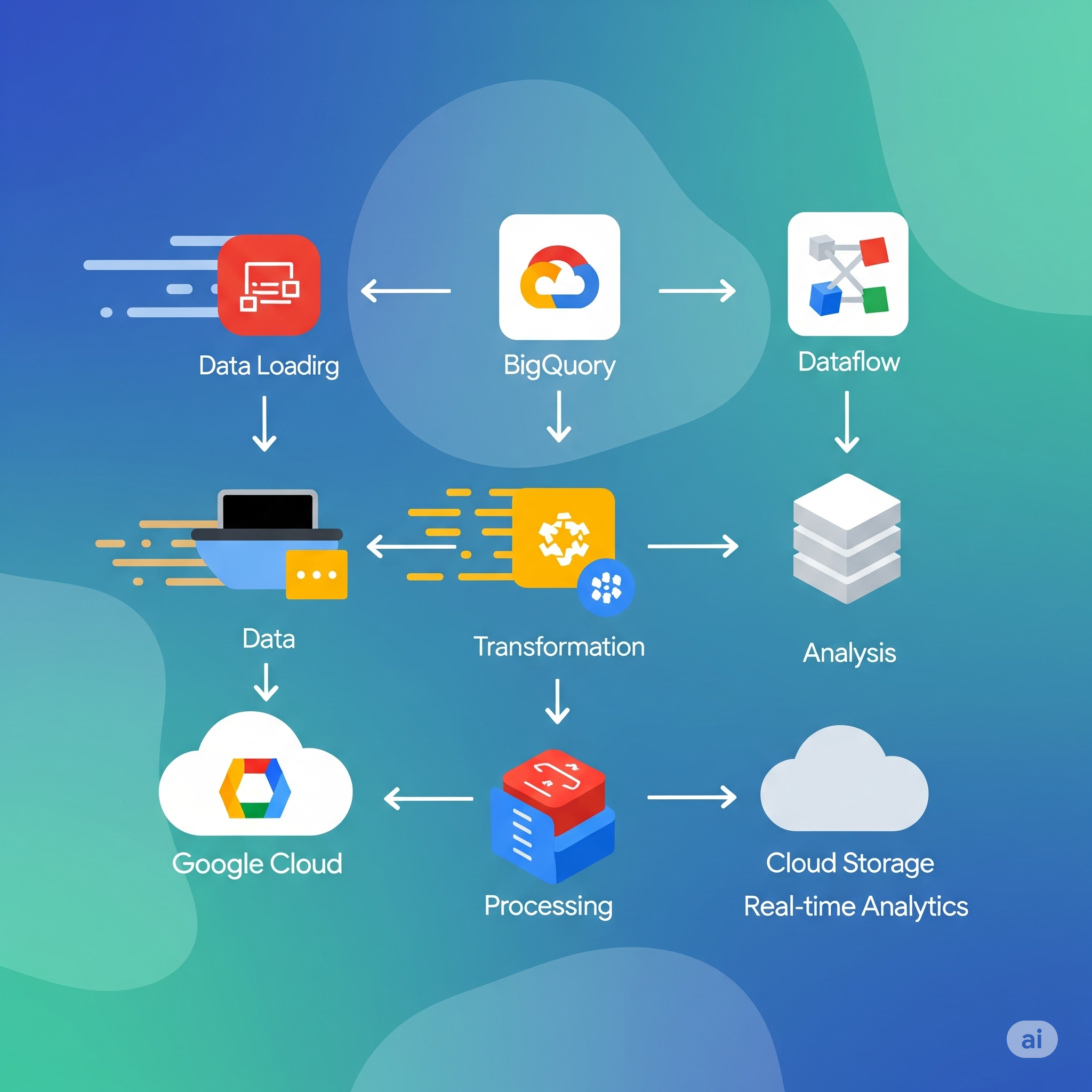

Bringing AI Agents to Life on Google Cloud Platform (GCP)

Google Cloud offers a robust, scalable, and secure environment perfect for building, deploying,

and managing sophisticated AI agents, with a splash of quick-start magic. You don't need to start

from scratch: here are some key GCP components that make it possible:

- Vertex AI Agent Builder: It's Google Cloud's integrated platform designed

to help you build and orchestrate enterprise-grade multi-agent experiences. It simplifies the

process of connecting agents to your enterprise data and tools, and deploying them to a fully

managed runtime. Think of it as the command center for agent development.

- Large Language Models (LLMs) via Vertex AI (e.g., Gemini, Gemma): These

powerful models act as the "brain" of your AI agents, enabling them to understand natural

language, reason, generate content, and interact intelligently.

- Cloud Functions & Cloud Run: For hosting the agent's logic and tools as

serverless components, allowing for flexible, scalable, and cost-effective execution without

managing infrastructure.

- Google Cloud Pub/Sub: Essential for building multi-agent systems, acting as

a highly scalable messaging service that allows different agents to communicate and coordinate

seamlessly.

- BigQuery & Cloud Storage: For robust data storage and analytics, grounding

your agents with the necessary information to perform their tasks effectively.

- APIs & Integrations: Allowing your AI agents to interact with your existing

business applications and external services, truly embedding them into your workflows.
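
Here's a rough sketch of how two agents might coordinate through topics, the way the Pub/Sub bullet describes. Big assumption up front: `InMemoryBus` is a stand-in I wrote for illustration, not the Pub/Sub client library, and the agents, topic names, and approval limit are hypothetical.

```python
from collections import defaultdict


class InMemoryBus:
    """Stand-in for a message bus; NOT the Pub/Sub client library."""

    def __init__(self):
        self._subscribers = defaultdict(list)

    def subscribe(self, topic, handler):
        self._subscribers[topic].append(handler)

    def publish(self, topic, message):
        # Deliver to every subscriber of the topic, in subscription order.
        for handler in self._subscribers[topic]:
            handler(message)


bus = InMemoryBus()
log = []


def extraction_agent(message):
    # Hypothetical agent 1: pulls the amount out of an invoice, then hands off.
    amount = float(message["text"].split("$")[1])
    bus.publish("invoice.extracted", {"id": message["id"], "amount": amount})


def approval_agent(message):
    # Hypothetical agent 2: auto-approves small invoices, escalates the rest.
    decision = "approve" if message["amount"] < 1000 else "escalate"
    log.append((message["id"], decision))


bus.subscribe("invoice.received", extraction_agent)
bus.subscribe("invoice.extracted", approval_agent)
bus.publish("invoice.received", {"id": "inv-1", "text": "Total due: $250.00"})
bus.publish("invoice.received", {"id": "inv-2", "text": "Total due: $4800.00"})
```

Neither agent knows the other exists; they only agree on topic names and message shape, which is what makes it easy to add a third agent later.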

Real-World Impact: Where AI Agents Shine

In practical terms, imagine AI agents automating processes like:

- Customer Service Triage: An agent instantly analyzes incoming customer inquiries,

resolves common issues autonomously, and intelligently routes complex cases to the right human agent,

complete with a summarized history. (Think reduced wait times and happier customers!)

- Automated Document Processing: Agents extract key information from invoices,

contracts, or legal documents, validate it against databases, and initiate workflows like payment

processing or compliance checks—all in minutes. (Massive time savings and fewer errors!)

- Proactive System Monitoring: An agent continuously monitors IT infrastructure,

identifies anomalies, diagnoses potential issues, and even initiates self-healing actions or alerts

the relevant team before a problem escalates. (Minimizing downtime and boosting reliability!)

- Personalized Marketing Campaigns: An agent analyzes customer behavior data,

identifies segmentation opportunities, drafts personalized marketing copy, and even schedules

campaigns across multiple channels. (Increased engagement and conversion rates!)

- Supply Chain Optimization: Agents monitor inventory levels, predict demand

fluctuations, and automatically trigger reorders or suggest optimal routing for logistics, reacting

to real-time conditions. (Reduced waste and improved delivery times!)

Ready to Go Beyond the Hype?

The era of applied AI agents is here, and Google Cloud provides the foundation to make it a reality

for your business. This isn't just about adopting new tech; it's about reimagining how work gets

done, empowering talent, and achieving unparalleled efficiency.

If you're curious about how AI agents can specifically transform your operations and deliver

real-world efficiency gains, let's connect! I'd love to explore the possibilities with you.

What are your thoughts on autonomous AI agents? Share in the comments below or contact me.

I'm always eager to hear your perspectives!

Alright, folks! Wednesday is here, and I'm buzzing with excitement to share what's

been happening this week. Seriously, it's been a lot!

Have an awesome week, everyone! Let's keep innovating!

#AIAgents

#GoogleCloud

#DigitalTransformation

#BusinessAutomation

#Efficiency

New Game Releases

July 2, 2025 by

Kee

July 2025*

- Mecha Break (PC, XSX) - July 1

- EA Sports College Football 26 (PC, XSX, PS5) - July 10

- PATAPON 1+2 Replay (PC, NS) - July 11

- Tony Hawk's Pro Skater 3 + 4 (PC, PS5, PS4, XSX, XO, NS, NS2) - July 11

- Donkey Kong Bananza (NS2) - July 17

- Shadow Labyrinth (PS5, XSX, PC, NS, NS2) - July 18

- Wildgate (PC, XSX, PS5) - July 22

- Wheel World (PC, XSX, PS5) - July 23

- Killing Floor 3 (PC, PS5, XSX) - July 24

- Super Mario Party Jamboree: Nintendo Switch 2 Edition (NS2) - July 24

- Wuchang: Fallen Feathers (PC, XSX, PS5) - July 24

- No Sleep For Kaname Date - From AI: The SOMNIUM FILES (NS) - July 25

- Grounded 2 (PC, XSX) - July 29

- Tales of the Shire (NS, PC, XSX, XO, PS4, PS5) - July 29

- Ninja Gaiden: Ragebound (NS, PC, XSX, XO, PS4, PS5) - July 29

- Code Violet (PS5) - July TBD

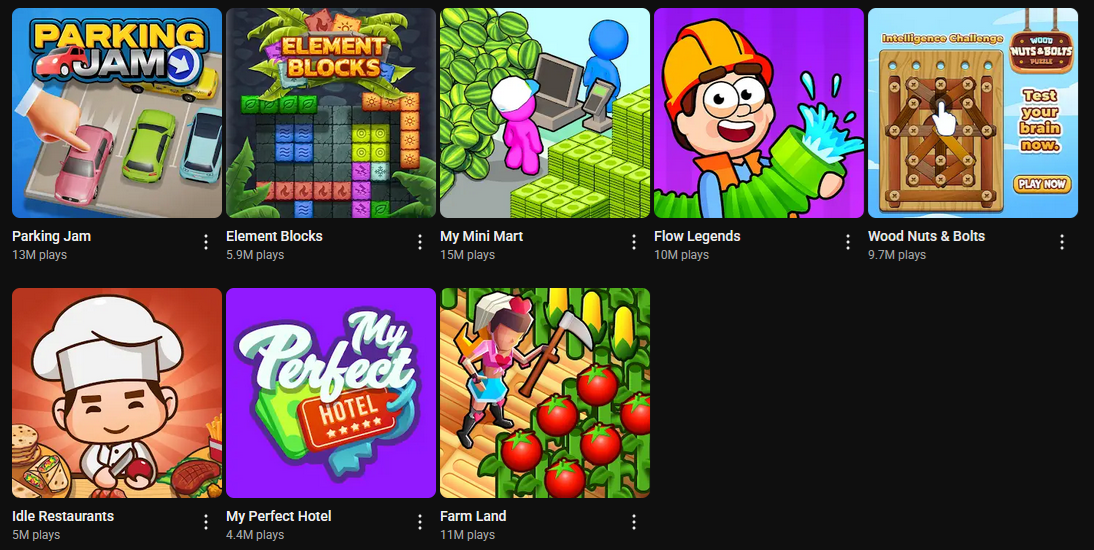

What I'm Playing and Obsessed With Now:

- Nothing... Minecraft is too tough and I'm too busy with the Google Cloud projects

and funding AI projects to even play the OG Call of Duty games. I am working on a

fun AI project for Minecraft, but it's big and is taking a lot longer than I expected.

It's coming soon.

- YouTube Playables while waiting for processing. There is one about parking and

a farm game I like, but it's this wood screw puzzle game that I can't stop playing.

Rewards and Challenges:

NONE. The state of gaming has me uninterested until I can play Death Stranding 2. I

watched streamers play it and I want to play it. I just don't know if I will enjoy it

as much as Death Stranding. I also saw the Red Dead Redemption Online Strange World content,

which I want to try at some point.

#Games

#DeathStranding

#DeathStranding2

#Minecraft

#RedDeadRedemptionOnline

*SOURCES:

https://www.gamesradar.com/video-game-release-dates

Optimizing Game Server Performance with AI and Google Cloud: Lessons from the Trenches

July 2, 2025 by

Kee

In the fast-paced world of online gaming, a smooth and responsive experience is a necessity.

Lag, downtime, and performance hiccups can quickly alienate players and impact your game's

success. I've been in the trenches, leveraging the power of Artificial Intelligence (AI) and

Google Cloud Platform (GCP) to revolutionize game server management.

This post will dive deeper into how specific AI techniques and GCP services can lead to

ultra-efficient game server performance, drawing from our real-world experience.

The Power of AI in Game Server Management

Traditional game server management often involves manual adjustments and reactive responses.

AI, however, offers a proactive and intelligent approach, allowing for unprecedented levels of

optimization.

Predictive Scaling: Anticipating Player Demand

One of the most critical challenges in game server management is dynamically scaling resources

to match player demand. Spikes in concurrent users can overwhelm servers, leading to frustrating

lag. Conversely, over-provisioning resources during low-demand periods wastes money.

This is where predictive scaling with AI truly shines. Instead of simply reacting to current

player counts, AI models analyze historical data, player behavior patterns, and even external

factors like marketing campaigns or time of day. By leveraging machine learning algorithms, we

can accurately forecast future demand and automatically adjust server capacity before a surge

occurs. This ensures a seamless experience for players while optimizing infrastructure costs.
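
As a back-of-the-napkin illustration of forecasting demand and sizing capacity ahead of a surge, here's a small Python sketch. It is deliberately naive and not the model described above: the trend-based `forecast_next`, the 100 players per server, and the 20% headroom are all assumptions picked for the example.

```python
import math


def forecast_next(players, window=3):
    """Naive forecast: last value plus the average step over the window.

    Needs at least two samples; a production model would also use
    seasonality, events, and marketing calendars.
    """
    recent = players[-(window + 1):]
    steps = [b - a for a, b in zip(recent, recent[1:])]
    return recent[-1] + sum(steps) / len(steps)


def servers_needed(expected_players, players_per_server=100, headroom=0.2):
    """Capacity for the forecast plus a safety margin, never below one server."""
    return max(1, math.ceil(expected_players * (1 + headroom) / players_per_server))


# Made-up concurrent player counts for the last four hours.
hourly_players = [400, 520, 610, 760]
predicted = forecast_next(hourly_players)
target_servers = servers_needed(predicted)
```

With these numbers the forecast for the next hour is 880 players, which works out to 11 servers once the headroom is added, so capacity can be provisioned before the players show up.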

Anomaly Detection: Catching Issues Before They Impact Players

Imagine a subtle, creeping performance degradation that's hard to pinpoint manually. This is where

anomaly detection comes in. AI-powered anomaly detection continuously monitors various server

metrics—CPU usage, memory consumption, network latency, and more. It establishes a baseline of

"normal" behavior and then flags any deviations that fall outside of expected parameters.

This allows us to identify potential issues like resource leaks, unusual traffic patterns, or even

early signs of DDoS attacks, often before they escalate into game-breaking problems. By catching these

anomalies early, we can take corrective action proactively, minimizing downtime and maintaining a

high quality of service for players.
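
The baseline-and-deviation idea can be sketched in a few lines of Python. This is a simple z-score check, which I'm using as a stand-in for whatever model you actually train; the baseline size, the threshold of 3 standard deviations, and the latency numbers are assumptions.

```python
from statistics import mean, stdev


def find_anomalies(samples, baseline_size=5, threshold=3.0):
    """Flag samples more than `threshold` standard deviations from the baseline."""
    baseline = samples[:baseline_size]
    mu, sigma = mean(baseline), stdev(baseline)
    anomalies = []
    for index, value in enumerate(samples[baseline_size:], start=baseline_size):
        # Guard against a perfectly flat baseline (sigma == 0).
        z = (value - mu) / sigma if sigma else 0.0
        if abs(z) > threshold:
            anomalies.append((index, value))
    return anomalies


# Made-up network latency samples in milliseconds.
latency_ms = [40, 42, 41, 39, 43, 42, 40, 95, 41]
flagged = find_anomalies(latency_ms)
```

Only the 95 ms spike at index 7 is flagged; the normal jitter around 41 ms stays under the threshold.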

GCP Services: The Backbone of Intelligent Game Server Management

Google Cloud Platform provides a robust and flexible foundation for implementing these advanced

AI techniques. Here are some key services we utilize:

Google Kubernetes Engine (GKE): Orchestrating Scalable Game Servers

At the core of our infrastructure is Google Kubernetes Engine (GKE). GKE provides a managed

environment for deploying, managing, and scaling containerized applications. For game servers,

this is a game-changer. We can package our game servers into Docker containers, allowing for

rapid deployment and easy scaling.

GKE's auto-scaling capabilities, combined with our AI-driven predictive models, ensure that our

game server fleet can effortlessly expand and contract based on anticipated and real-time player

loads. This ensures high availability and responsiveness, even during peak gaming events.
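
One way to combine a predictive signal with real-time load is to compute both answers and take the larger one. The sketch below uses the proportional rule from Kubernetes' Horizontal Pod Autoscaler (replicas scaled by current metric over target metric) in simplified form, without its tolerance or stabilization behavior. The function names, the min/max bounds, and the idea of feeding in `predicted_replicas` are my assumptions, not a GKE API.

```python
import math


def reactive_replicas(current_replicas, current_cpu, target_cpu):
    """Simplified proportional rule: scale replicas by current / target load."""
    return math.ceil(current_replicas * current_cpu / target_cpu)


def desired_replicas(current_replicas, current_cpu, target_cpu,
                     predicted_replicas, min_replicas=2, max_replicas=50):
    """Take the larger of the reactive and predictive answers, then clamp."""
    reactive = reactive_replicas(current_replicas, current_cpu, target_cpu)
    return min(max_replicas, max(min_replicas, reactive, predicted_replicas))


# Quiet period: low CPU, no surge forecast.
quiet = desired_replicas(10, current_cpu=30, target_cpu=60, predicted_replicas=4)
# Low CPU right now, but the forecast expects a surge.
surge_expected = desired_replicas(10, current_cpu=30, target_cpu=60, predicted_replicas=18)
# Forecast missed it: real-time load wins.
overloaded = desired_replicas(10, current_cpu=90, target_cpu=60, predicted_replicas=4)
```

Taking the maximum means a bad forecast can never scale the fleet below what live traffic needs, while a good forecast still gets servers warm before the spike.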

Vertex AI: Powering Our Machine Learning Models

Vertex AI is Google Cloud's unified machine learning platform, and it's instrumental in building,

deploying, and managing our AI models for predictive scaling and anomaly detection.

With Vertex AI, we can:

- Train custom machine learning models: We feed in historical server performance

data, player metrics, and other relevant information to train models that accurately predict player

demand or identify anomalies.

- Manage datasets: Vertex AI provides tools for data labeling and preparation, which

is crucial for building high-quality AI models.

- Deploy models for real-time inference: Our trained models can be deployed as

endpoints, allowing our game server management system to query them in real-time for scaling

recommendations or anomaly alerts.

- Monitor model performance: We continuously monitor the accuracy and effectiveness

of our AI models, retraining them as needed to adapt to evolving player behavior and game updates.

Other Key GCP Services

Beyond GKE and Vertex AI, other GCP services play a vital role:

- Cloud Load Balancing: Distributes incoming player traffic across multiple game

server instances, ensuring optimal performance and high availability.

- Cloud Monitoring & Cloud Logging: Provide comprehensive insights into server

performance and application behavior, crucial for both training AI models and troubleshooting.

- Cloud Spanner/Firestore: Scalable, high-performance databases for storing

player data, game states, and other critical information.

The "Kee" Advantage: Real-World Impact

By combining these cutting-edge AI techniques with the robust capabilities of Google Cloud,

I have achieved significant improvements for clients:

- Reduced operational costs: By optimizing resource allocation, we minimize

unnecessary infrastructure spend.

- Enhanced player experience: Near-zero lag and downtime contribute to higher

player satisfaction and retention.

- Proactive issue resolution: Anomaly detection allows us to address potential

problems before they impact gameplay.

- Scalability for growth: Our systems are designed to effortlessly handle

massive influxes of players, supporting your game's growth trajectory.

In the competitive landscape of online gaming, staying ahead means embracing innovation. AI and

Google Cloud are not just buzzwords; they are powerful tools that, when used effectively, can

transform your game server performance and ultimately, the success of your game.

#GameServers

#GKE

#VertexAI

#AIOptimization

#CloudGaming

#LowLatency

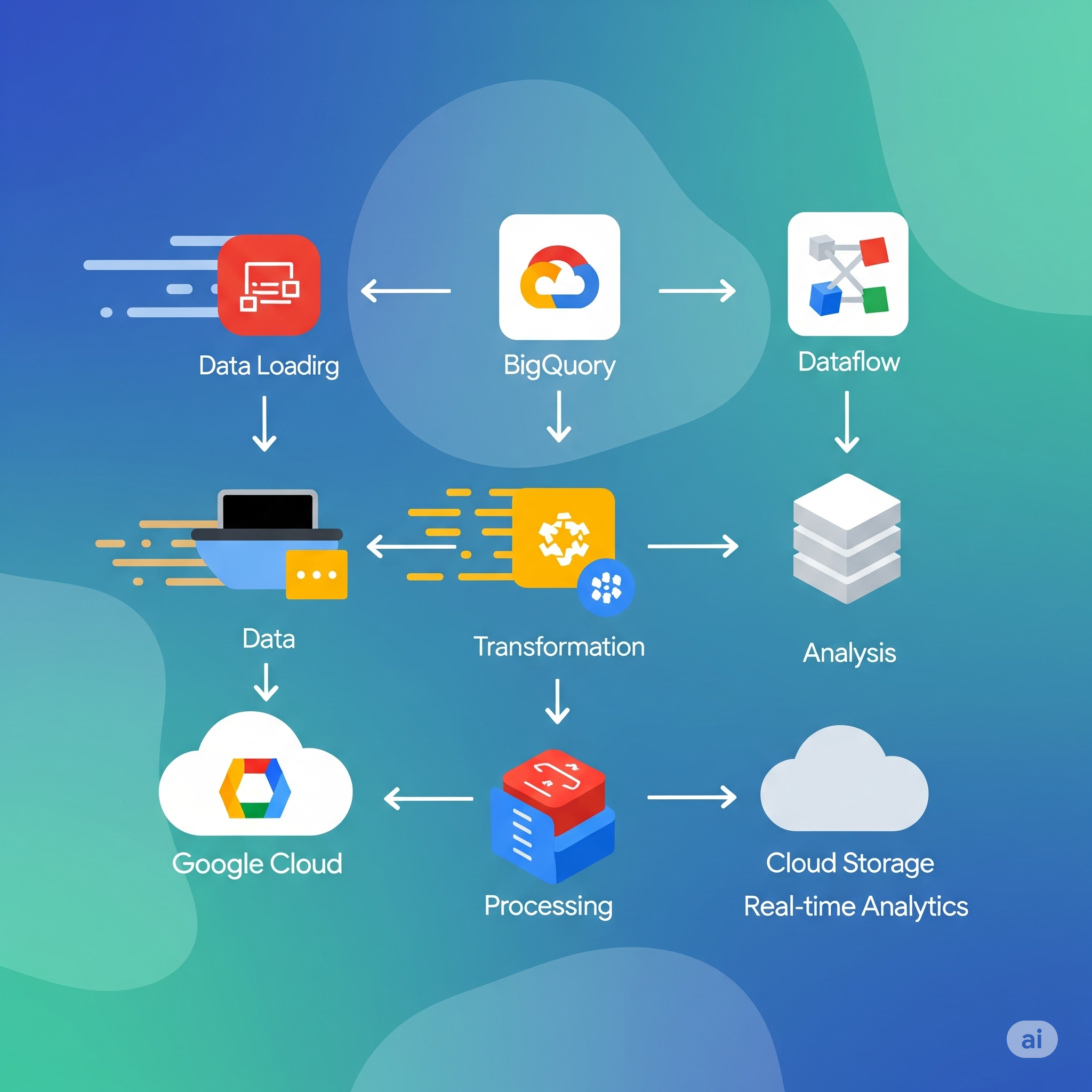

Zero-ETL on Google Cloud: Simplifying Data Integration for Real-Time Insights

July 3, 2025 by

Kee

Let's keep it real: "ETL" (Extract, Transform, Load) sounds about as exciting as watching

paint dry. And for good reason! The traditional ETL process—moving data from one place,

cleaning it up, and then loading it somewhere else—has always been a bottleneck. It's been

clunky, time-consuming, and a major headache when you need real-time insights.

But what if I told you there's a better way? A way to get your data where it needs to be,

ready for analysis, almost instantly, without all that traditional complexity? Welcome to the

world of zero-ETL on Google Cloud.

Ditching the Old Way: What is Zero-ETL?

Imagine your data flowing effortlessly from its source directly into your analytics platform,

ready for immediate querying. No elaborate data pipelines to build and maintain, no hours spent

transforming messy datasets. That's the promise of zero-ETL.

It's not magic; it's a shift in how we think about data integration. Instead of rigid,

batch-oriented processes, zero-ETL leverages modern cloud architectures to enable continuous,

direct data access. This means your insights are always fresh, always relevant, and always

available when you need them most.

Google Cloud: Your Partner in Simplified Data Integration

Google Cloud Platform (GCP) is a powerhouse when it comes to making zero-ETL a reality. They've

built services that are designed to work together seamlessly, eliminating those traditional

integration headaches.

BigQuery: The Analytics Professional

At the heart of the zero-ETL experience on Google Cloud is BigQuery. This isn't just another data

warehouse; it's a serverless, highly scalable, and incredibly fast analytics engine. BigQuery's

superpower lies in its ability to handle massive datasets and execute complex queries in seconds.

The magic for zero-ETL? BigQuery can directly query data that resides in other GCP services

without needing to "load" it first. For instance:

- Direct Access to Cloud Storage: Have data sitting in Cloud Storage? BigQuery

can query it directly, no need to load it into BigQuery tables beforehand. This is perfect for

logs, raw event data, or large files that change frequently.

- BigQuery Omni: Want to query data living on AWS or Azure without moving it?

BigQuery Omni extends BigQuery's reach, allowing you to analyze data across clouds, reducing

egress costs and data movement.
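
For the Cloud Storage case, the "no load step" part comes down to defining an external table that points at the files. Here's a small Python helper that renders that DDL; the project, dataset, table, and bucket names are hypothetical, and the helper itself is just string formatting for illustration (you'd run the resulting statement in BigQuery).

```python
def external_table_ddl(table, uris, file_format="PARQUET"):
    """Render BigQuery DDL for an external table over Cloud Storage files."""
    uri_list = ", ".join(f"'{uri}'" for uri in uris)
    return (
        f"CREATE OR REPLACE EXTERNAL TABLE `{table}`\n"
        f"OPTIONS (format = '{file_format}', uris = [{uri_list}]);"
    )


# Hypothetical project, dataset, and bucket names.
ddl = external_table_ddl(
    "my-project.analytics.raw_events",
    ["gs://my-bucket/events/*.parquet"],
)
print(ddl)
```

Once the table exists, new files that match the URI pattern are picked up by the next query with no reload step.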

Beyond BigQuery: Other Key Players

While BigQuery is central, other GCP services play crucial roles in enabling a truly zero-ETL

architecture:

- Change Data Capture (CDC) with Datastream: For operational databases like AlloyDB,

PostgreSQL, or MySQL, Datastream allows you to capture real-time changes and replicate them directly

into BigQuery or Cloud Storage. This means your analytics are always up-to-date with your operational

data, without complex batch jobs.

- Pub/Sub: Real-time Messaging: When you need to stream events or messages from various

sources, Pub/Sub acts as a reliable, scalable messaging service. Data can flow from Pub/Sub directly into

BigQuery or trigger other services for immediate processing.

- Dataflow: When Transformations Are Needed (but simpler): "Zero-ETL" is about

minimizing traditional ETL. But sometimes, you still need to clean, aggregate, or enrich data on the fly.

Dataflow steps in here. It's a fully managed service for executing stream and batch processing pipelines.

It's designed for scale and simplifies complex transformations, making them far less burdensome than

traditional ETL tools. Think of it as "just-in-time" transformation, rather than a separate, laborious step.

- BigQuery ML: Once your data is in BigQuery, why move it again for machine learning?

BigQuery ML lets you build and train ML models directly within BigQuery using standard SQL. This cuts out

the ETL step required to move data to a separate ML platform, accelerating your path from data to predictive

insights.
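
To show what change data capture means mechanically, here's a minimal Python sketch that replays a stream of change events onto a replica. The event shape (`op`, `key`, `row`) is something I made up for the example; Datastream's actual record format is different, and it handles all of this for you.

```python
def apply_changes(replica, events):
    """Apply ordered change events to a replica keyed by primary key."""
    for event in events:
        key = event["key"]
        if event["op"] in ("insert", "update"):
            # Upsert: merge the changed columns into the existing row.
            replica[key] = {**replica.get(key, {}), **event["row"]}
        elif event["op"] == "delete":
            replica.pop(key, None)
        else:
            raise ValueError(f"unknown operation: {event['op']}")
    return replica


# Hypothetical event shape; Datastream's actual record format differs.
events = [
    {"op": "insert", "key": 1, "row": {"status": "new", "total": 40}},
    {"op": "insert", "key": 2, "row": {"status": "new", "total": 15}},
    {"op": "update", "key": 1, "row": {"status": "shipped"}},
    {"op": "delete", "key": 2},
]
orders = apply_changes({}, events)
```

After the four events, the replica holds a single order (key 1, shipped, total 40), matching the source database without a nightly batch copy.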

The Real-World Impact: Faster Insights, Less Hassle

For businesses and developers, zero-ETL on Google Cloud means:

- Real-Time Decisions: Get insights as events happen, not hours or days later.

- Reduced Complexity: Less time building and maintaining brittle data pipelines.

- Lower Costs: Fewer moving parts mean less infrastructure to manage and optimize.

- Empowered Users: Data is more accessible, enabling more people to find the answers they need.

We're constantly pushing the boundaries of what's possible with data, and zero-ETL is a massive leap

forward. It's about getting out of the way of your data and letting it work for you.

Keep innovating, keep building, and let's push the boundaries of AI together!

#GCP

#ZeroETL

#RealTimeData

#DataIntegration

#BigQuery

#Dataflow

#GoogleCloud

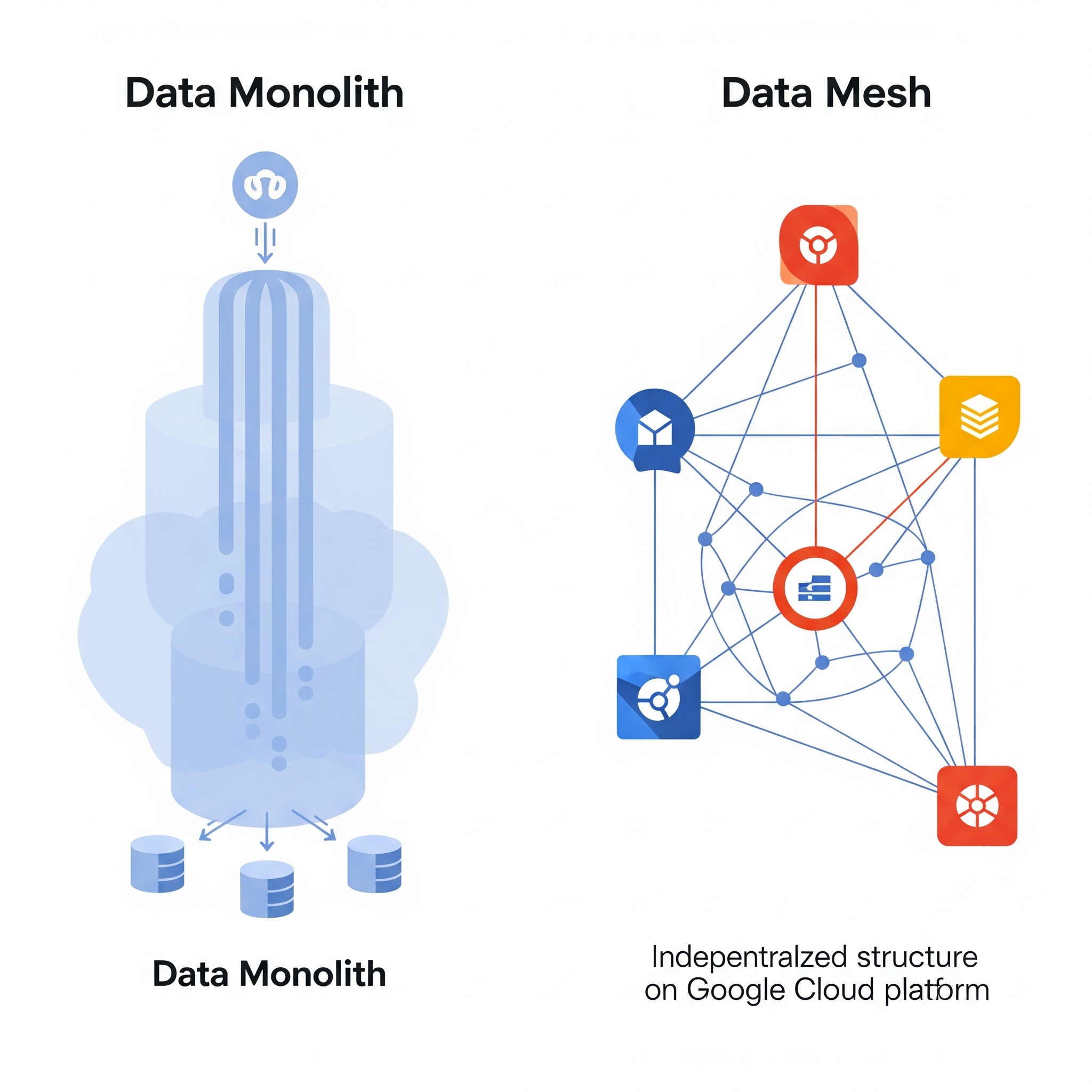

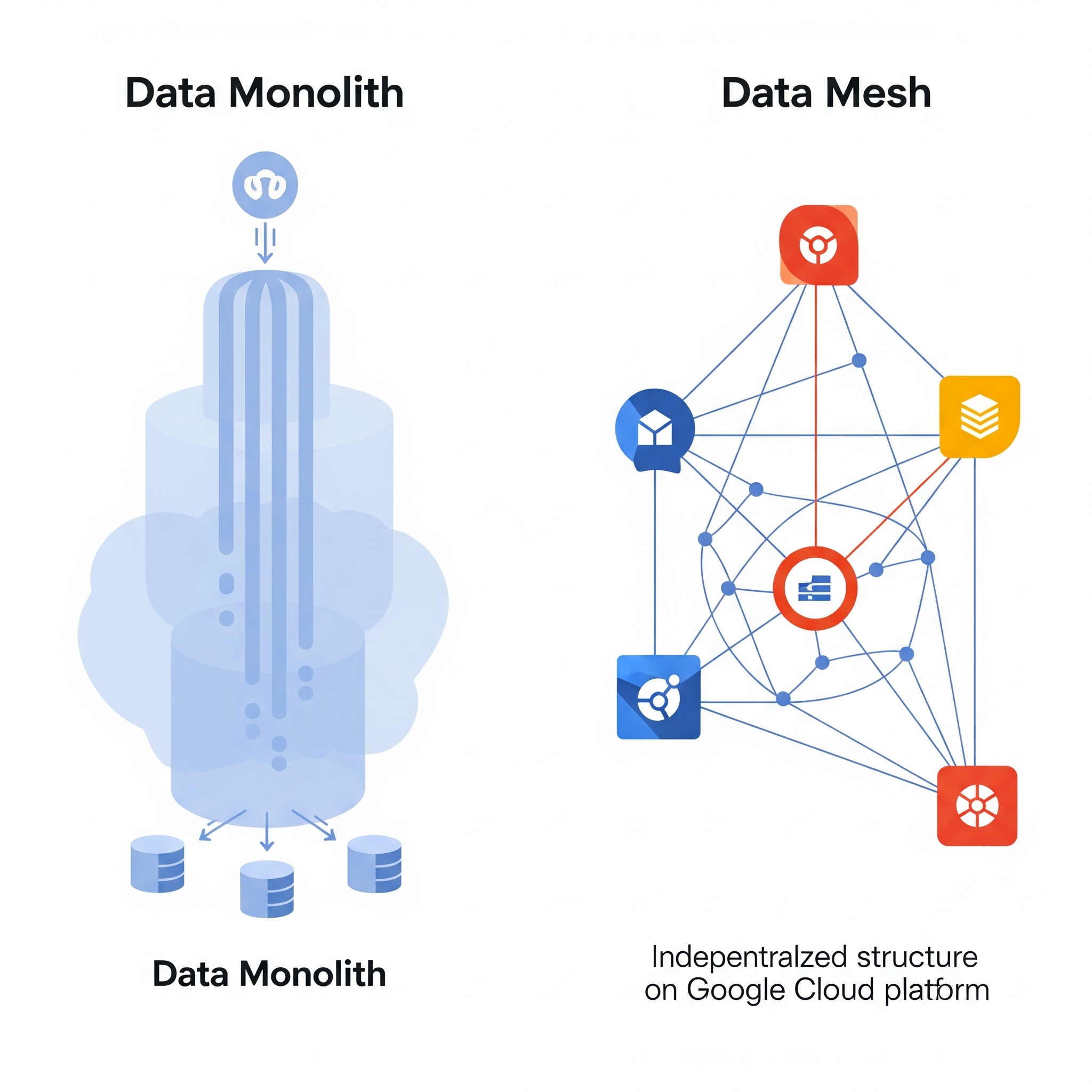

Demystifying Data Mesh on GCP: Building Decentralized Data Platforms

July 4, 2025 by

Kee

The world of data has gotten complicated. As organizations grow, so do their

data landscapes, often leading to a centralized data monolith: a single,

sprawling data lake or warehouse managed by a bottlenecked central team. This

can slow down innovation, create ownership confusion, and ultimately hinder your

ability to extract real value from your data.

Enter the Data Mesh: a revolutionary architectural paradigm that's changing

how we think about data. Instead of a single, centralized data platform, Data

Mesh proposes a decentralized approach, treating data as a product owned by the

domain teams who best understand it. It's about empowering data producers and

consumers, fostering agility, and democratizing access to data.

But how do you actually build a Data Mesh? Especially on a powerful cloud

platform like Google Cloud? Let's demystify it.

What is Data Mesh, Anyway? The Core Principles

Before we dive into GCP services, let's quickly recap the four foundational

principles of Data Mesh:

- Domain-Oriented Decentralized Data Ownership and Architecture:

Data ownership shifts from a central team to the business domains that generate

and consume the data (e.g., Sales, Marketing, Product Development). Each domain

is responsible for its own data, treating it as a valuable product.

- Data as a Product: Data within each domain is treated as a

product, designed for usability, discoverability, addressability, trustworthiness,

and security. It has clear APIs and documentation, making it easy for other

domains to consume.

- Self-Serve Data Platform: A foundational platform team provides

tools, infrastructure, and automation that enable domain teams to build, deploy,

and manage their data products independently, without constant reliance on a

central team.

- Federated Computational Governance: Instead of rigid, centralized

governance, Data Mesh promotes a federated model where a small, cross-functional team

defines global rules and standards, while individual domains apply them within their

context.

Implementing Data Mesh on Google Cloud: Your Toolkit

Google Cloud Platform (GCP) is exceptionally well-suited for implementing a Data Mesh

architecture due to its extensive suite of managed, serverless, and highly scalable

services. Here's how various GCP services can become the building blocks of your

decentralized data platform:

1. Domain-Oriented Decentralized Data Ownership

- Google Cloud Projects & Folders: Naturally align with domain

boundaries. Each domain can have its own GCP project (or a set of projects within

a folder), providing clear resource isolation, access control, and billing

separation. This directly supports decentralized ownership.

- Identity and Access Management (IAM): Granular IAM policies

allow domain teams to control who has access to their data products and resources

within their projects, enforcing strict domain ownership.
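
A quick sketch of what domain-owned access control can look like as data. The dictionary below follows the general bindings shape of an IAM policy (a role plus a list of members); the `grant` helper and the group addresses are hypothetical, and in practice you'd manage this through IAM itself or Terraform rather than by hand.

```python
def grant(policy, role, member):
    """Add a member to a role binding, creating the binding if needed."""
    for binding in policy["bindings"]:
        if binding["role"] == role:
            if member not in binding["members"]:
                binding["members"].append(member)
            return policy
    policy["bindings"].append({"role": role, "members": [member]})
    return policy


# Hypothetical groups for a Sales domain project.
sales_policy = {"bindings": []}
grant(sales_policy, "roles/bigquery.dataOwner", "group:sales-data@example.com")
grant(sales_policy, "roles/bigquery.dataViewer", "group:marketing-analysts@example.com")
grant(sales_policy, "roles/bigquery.dataViewer", "group:finance-analysts@example.com")
# Granting the same access twice is a no-op.
grant(sales_policy, "roles/bigquery.dataViewer", "group:finance-analysts@example.com")
```

The Sales team owns the data; other domains only ever get read access, and the whole policy lives with the Sales project.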

2. Data as a Product

- BigQuery: This is your star player for serving data as a product.

- Shared Datasets: Domains can create datasets in BigQuery and share

them with specific groups or other projects, enabling controlled consumption.

- Authorized Views: To expose curated subsets of data or to enforce

row-level security without duplicating data, domains can create authorized views.

- BigQuery Data Masking: For sensitive data, BigQuery's data masking

capabilities allow domains to serve anonymized or pseudonymized versions of data,

adhering to privacy policies.

- Cloud Storage: For unstructured or semi-structured data products

(e.g., raw logs, images, backups), Cloud Storage buckets within domain-specific projects

become the storage layer, easily consumable by others.

- Data Catalog: Essential for making data products discoverable. Domains

can tag, describe, and classify their data assets in Data Catalog, making it easy for

consumers across the organization to find and understand available data products.

- Apigee / Cloud Endpoints: For more complex data products that require

custom APIs (e.g., real-time inference endpoints from ML models built on domain data), API

management solutions can expose data products securely.
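
One lightweight way to enforce "data as a product" is a descriptor that every domain publishes with its data, plus a check for what's missing. This is a sketch under assumptions: the `DataProduct` fields and the readiness rules are my own illustration, not a Data Catalog schema.

```python
from dataclasses import dataclass, field


@dataclass
class DataProduct:
    """Hypothetical descriptor a domain team publishes alongside its data."""
    name: str
    owner_domain: str
    address: str  # where consumers read it, e.g. a table path
    description: str = ""
    schema: dict = field(default_factory=dict)
    freshness_sla_hours: int = 0

    def readiness_gaps(self):
        """List what is missing before the product is easy to find and trust."""
        gaps = []
        if not self.description:
            gaps.append("description")
        if not self.schema:
            gaps.append("schema")
        if self.freshness_sla_hours <= 0:
            gaps.append("freshness_sla_hours")
        return gaps


draft = DataProduct("orders_daily", "sales", "sales-prod.curated.orders_daily")
ready = DataProduct(
    "orders_daily", "sales", "sales-prod.curated.orders_daily",
    description="One row per order, refreshed daily.",
    schema={"order_id": "STRING", "total": "NUMERIC"},
    freshness_sla_hours=24,
)
```

A check like `readiness_gaps()` could run in CI so that a product without a description, schema, or freshness promise never gets published.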

3. Self-Serve Data Platform

- Google Kubernetes Engine (GKE) / Cloud Run: For building and

deploying custom data processing applications or data product APIs, GKE (for

container orchestration) and Cloud Run (for serverless containers) provide

scalable and flexible compute environments.

- Cloud Dataflow / Dataproc: For powerful data transformation and

processing pipelines within domains. Dataflow is fully managed and serverless,

perfect for stream and batch processing, while Dataproc offers managed Apache

Spark and Hadoop clusters for specific workloads. Domain teams can own and operate

these pipelines.

- Cloud Functions / Cloud Workflows: For event-driven automation

and orchestrating data product pipelines.

- Cloud Build / Cloud Deploy: For CI/CD pipelines to automate the

deployment of data products and underlying infrastructure, empowering domain teams

with self-service deployment capabilities.

- Terraform / Deployment Manager: Infrastructure as Code (IaC)

tools are crucial for enabling domain teams to provision and manage their own GCP

resources consistently and repeatedly.

4. Federated Computational Governance

- Shared VPC: While domains own their data, a Shared VPC network can provide a common

networking backbone, allowing secure and efficient communication between domain data products and shared

services, while maintaining individual project isolation.

- Policy Tags (Data Catalog): Used for fine-grained, automated governance. Policy Tags can

be applied to BigQuery columns and then linked to IAM roles, automatically enforcing access policies across

data products.

- Security Command Center: Provides centralized visibility into security posture and compliance

across all GCP projects, allowing a federated governance team to monitor adherence to global policies without

micromanaging individual domains.

- Cloud Monitoring & Cloud Logging: Essential for domains to monitor the health and performance

of their data products, and for a central governance team to have aggregated visibility if needed.
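
The policy tag idea (tag a column once, let access follow from group membership) can be modeled in a few lines. Everything here is hypothetical: the tag names, columns, and groups are invented, and this plain-Python lookup only mimics what BigQuery column-level security enforces for you.

```python
# Hypothetical policy tags, columns, and groups.
COLUMN_TAGS = {"email": "pii", "card_number": "financial", "order_total": None}
TAG_READERS = {"pii": {"privacy-team"}, "financial": {"finance-team", "privacy-team"}}


def readable_columns(user_groups, column_tags=COLUMN_TAGS, tag_readers=TAG_READERS):
    """Return the columns a user may read given their group memberships."""
    allowed = []
    for column, tag in column_tags.items():
        # Untagged columns are open; tagged ones need a group on the reader list.
        if tag is None or tag_readers.get(tag, set()) & set(user_groups):
            allowed.append(column)
    return allowed


analyst = readable_columns(["marketing-analysts"])
finance = readable_columns(["finance-team"])
privacy = readable_columns(["privacy-team"])
```

The governance team defines the tags and who may read them once; each domain only has to tag its columns, which is the "federated" part in practice.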

The Benefits of a Data Mesh on GCP

Implementing Data Mesh on Google Cloud isn't just about buzzwords; it delivers tangible benefits:

- Increased Agility: Domain teams can innovate faster without being bottlenecked by

a central data team.

- Enhanced Data Quality & Trust: Domains, being closest to the data, are best positioned

to ensure its quality and correctness.

- Improved Scalability: Leverage GCP's inherent scalability to handle ever-growing data volumes.

- Reduced Central IT Burden: The central data team can shift focus from operational tasks

to enabling and governing the platform.

- Faster Time to Insight: Data is readily available and understandable, accelerating analytics

and decision-making.

Data Mesh on GCP isn't a silver bullet, and it requires a significant cultural shift. However, by leveraging Google

Cloud's powerful, interconnected services, you can build a truly decentralized, domain-driven data platform that

empowers your organization to unlock the full potential of its data.

Ready to explore how a Data Mesh architecture on Google Cloud can transform your data strategy? Share your

projects and experiences! Let's learn and grow together.

#DataMesh

#DecentralizedData

#DataGovernance

#DataIntegration

#DomainDrivenDesign

#GoogleCloudArchitecture

Generative AI for Good: Ethical Considerations and Responsible Deployment on Google Cloud

July 5, 2025 by

Kee

Generative AI (GenAI) has captured the world's imagination, unlocking unprecedented possibilities in creativity, automation,

and problem-solving. From generating realistic images and compelling text to synthesizing code and designing new molecules,

its potential to transform industries and empower individuals is immense.

But with great power comes great responsibility. As we rapidly integrate GenAI into our applications and workflows,

critical ethical considerations come to the forefront. At Google Cloud, we believe that innovation must go hand-in-hand

with responsibility. This post will delve into the ethical landscape of GenAI and explore how Google Cloud's tools and

best practices are designed to support its responsible development and deployment.

The Ethical Imperatives of Generative AI

Generative AI presents unique ethical challenges that demand our proactive attention:

- Misinformation and Deepfakes: GenAI's ability to create highly realistic synthetic

content (images, audio, video, text) raises serious concerns about the spread of misinformation,

disinformation, and deceptive deepfakes. This can erode trust, manipulate public opinion, and even

impact democratic processes.

- Bias and Fairness: AI models learn from the data they're trained on. If this data

reflects existing societal biases (e.g., related to gender, race, or socioeconomic status), the GenAI

models can perpetuate and even amplify these biases, leading to unfair or discriminatory outputs in

areas like hiring, lending, or content moderation.

- Intellectual Property and Ownership: Who owns AI-generated content? When GenAI models

are trained on vast datasets, including copyrighted material, questions arise about content ownership,

unauthorized use, and fair compensation for original creators whose work contributed to the training data.

- Privacy and Data Security: The massive datasets required for training GenAI models can

contain sensitive personal information. Protecting this data from breaches and ensuring its ethical sourcing

and usage throughout the AI lifecycle is a constant challenge. Model inversion attacks, where training data

might be extracted from the model itself, are also a concern.

- Environmental Impact: The training and deployment of large GenAI models consume significant

computational resources, leading to substantial energy and water consumption. Responsible development also

includes considering the environmental footprint of these powerful technologies.

- Accountability and Explainability: When a GenAI model makes a "mistake" or generates

harmful content, who is accountable? The "black box" nature of some complex models makes it difficult to

understand why a particular output was generated, hindering accountability and trust.

Google Cloud's Commitment to Responsible AI

At Google, responsible AI is a foundational principle, guided by our AI Principles established

in 2018. These principles are a living constitution that informs our approach to developing and

deploying AI safely and ethically. On Google Cloud, we translate these principles into practical

tools and best practices, empowering our customers to build and deploy GenAI responsibly.

Tools and Best Practices for Responsible GenAI on GCP:

- Safety Filters and Controls (Vertex AI Generative AI):

- Built-in Safety Filters: Vertex AI's Generative AI offerings (like the Gemini API) come

with pre-trained safety filters designed to detect and block responses that fall into harmful categories

(e.g., hate speech, harassment, self-harm, sexual content).

- Customizable Safety Attributes: Customers can further customize these filters by defining

their own safety attributes and policies, allowing fine-grained control over content generation tailored to

specific use cases and compliance needs.

- Model Armor: This feature enhances the security and safety of AI applications by screening

foundation model prompts and responses for different security and safety risks.
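
Conceptually, a safety filter scores a response per harm category and blocks it when any score crosses a threshold. Here's a minimal sketch of that logic. The category names, scores, and thresholds are invented for illustration and this is not the Vertex AI API; the real service computes the scores and applies the configured thresholds for you.

```python
# Hypothetical category names, scores, and thresholds for illustration only.
DEFAULT_THRESHOLDS = {"hate_speech": 0.5, "harassment": 0.5, "self_harm": 0.3}


def screen_response(scores, thresholds=DEFAULT_THRESHOLDS):
    """Return (allowed, blocked_categories) for one candidate response."""
    blocked = sorted(
        category for category, limit in thresholds.items()
        if scores.get(category, 0.0) >= limit
    )
    return (not blocked, blocked)


safe = screen_response({"hate_speech": 0.02, "harassment": 0.10})
unsafe = screen_response({"harassment": 0.81, "self_harm": 0.35})
# A stricter policy for a hypothetical children's product.
strict = screen_response({"harassment": 0.10}, {"harassment": 0.05})
```

The same response that passes the default policy is blocked under the stricter one, which is the point of customizable thresholds: the use case decides how cautious to be.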

- Bias Detection and Mitigation (Vertex AI):

- Model Evaluation on Vertex AI: Provides metrics to understand model performance and evaluate

potential bias using common data and model bias metrics. This helps identify and address biases during training

and over time.

- Fairness Metrics: Tools and methodologies to standardize fairness metrics for AI evaluation,

promoting diverse and representative datasets for training.

- Continuous Monitoring: Vertex AI Model Monitoring allows you to continuously monitor your

deployed models for data drift, concept drift, and performance degradation, which can be indicators of emerging

biases.
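
To give "bias metrics" some shape, here's a sketch of one common fairness measure, the demographic parity gap: the difference in how often a model says "yes" across groups. The groups and predictions are made up, and this is one metric among many, not what Vertex AI computes by default.

```python
def selection_rates(records):
    """Share of positive predictions per group."""
    totals, positives = {}, {}
    for group, predicted_positive in records:
        totals[group] = totals.get(group, 0) + 1
        positives[group] = positives.get(group, 0) + int(predicted_positive)
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_gap(records):
    """Largest difference in selection rate between any two groups."""
    rates = selection_rates(records).values()
    return max(rates) - min(rates)


# Made-up predictions: (group, model_said_yes)
predictions = [
    ("a", True), ("a", True), ("a", False), ("a", True),
    ("b", True), ("b", False), ("b", False), ("b", False),
]
rates = selection_rates(predictions)
gap = demographic_parity_gap(predictions)
```

Here group "a" is selected 75% of the time and group "b" 25%, a gap of 0.5. A gap that large is a signal to look at the training data and features before the model goes anywhere near hiring or lending decisions.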

- Transparency and Explainability (Explainable AI):

- Explainable AI (XAI): Google Cloud offers XAI tools that help you understand why your models

make certain predictions or generate specific outputs. This increased transparency is crucial for debugging,

auditing, and building trust in your GenAI applications.

- Model Cards Toolkit: Encourages developers to create "Model Cards," documentation that provides

critical information about an AI model, including its purpose, training data, limitations, and ethical

considerations. This promotes transparency and responsible model governance.

- Privacy and Security by Design:

- VPC Service Controls: Establish secure perimeters around your data and AI services to prevent

data exfiltration and unauthorized access, even by compromised internal accounts.

- Customer-Managed Encryption Keys (CMEK): Gives you control over the encryption keys used for

your data at rest in Google Cloud services, adding an extra layer of security.

- Data Governance & Data Lineage: Tools like Data Catalog help you understand where your data

comes from, how it's transformed, and who has access to it, supporting data privacy compliance. Google Cloud

also offers data sovereignty options.

- Secure AI Framework (SAIF): Google's conceptual framework for building and deploying secure AI

systems, offering best practices and guidance for customers.

- Responsible Training Data and IP Considerations:

- Indemnification: Google Cloud offers IP indemnification for generative AI services, providing

customers with legal protection against certain intellectual property claims related to the training data.

- Customer Data Isolation: Google Cloud does not use your customer data to train our foundation

models without your permission, ensuring your proprietary information remains private.
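The safety filters described above come down to comparing a per-category rating against a policy. Here is a minimal, library-free sketch of that idea. It is not the Vertex AI API: the category names, the `Severity` levels, `DEFAULT_POLICY`, and both functions are hypothetical names chosen for illustration, and the ratings stand in for whatever a real safety classifier would return.

```python
from enum import IntEnum


class Severity(IntEnum):
    """Hypothetical ordered severity levels a safety classifier might assign."""
    NEGLIGIBLE = 0
    LOW = 1
    MEDIUM = 2
    HIGH = 3


# Hypothetical per-category policy: block a response when the rated
# severity is at or above the configured level for that category.
DEFAULT_POLICY = {
    "hate_speech": Severity.MEDIUM,
    "harassment": Severity.MEDIUM,
    "sexual_content": Severity.LOW,
    "self_harm": Severity.LOW,
}


def blocked_categories(ratings, policy=DEFAULT_POLICY):
    """Return the sorted categories whose rating meets or exceeds the policy level.

    `ratings` maps category name -> Severity. Categories missing from the
    ratings are treated as NEGLIGIBLE; categories missing from the policy
    are never blocked.
    """
    return sorted(
        category
        for category, limit in policy.items()
        if ratings.get(category, Severity.NEGLIGIBLE) >= limit
    )


def should_block(ratings, policy=DEFAULT_POLICY):
    """A response is blocked if any category trips its threshold."""
    return bool(blocked_categories(ratings, policy))


if __name__ == "__main__":
    ratings = {"hate_speech": Severity.LOW, "self_harm": Severity.MEDIUM}
    print(blocked_categories(ratings))
    print(should_block(ratings))
```

Keeping the policy as plain data means a stricter or looser configuration for a specific use case is just a different dictionary passed as `policy`, which is easy to review and audit.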

Building a Culture of Responsible AI

Beyond tools, responsible GenAI deployment requires a cultural commitment. Google Cloud encourages:

- Human Oversight: Maintaining human involvement and review, especially for

high-risk applications, to catch unintended consequences.

- Clear Policies and Guidelines: Establishing internal guidelines for the ethical

use of GenAI within your organization.

- Continuous Learning and Evaluation: AI is rapidly evolving. Staying informed about

emerging risks and continuously evaluating your models for fairness, safety, and performance is essential.

- Collaboration: Engaging with stakeholders, including legal, ethics, and diverse

user groups, throughout the development lifecycle.

Generative AI: Innovation with Integrity

Generative AI holds immense promise for businesses across every sector. By leveraging Google Cloud's robust

Responsible AI tools, best practices, and principled approach, you can harness the power of GenAI to drive

innovation, improve efficiency, and create amazing experiences, all while ensuring your AI systems are fair,

safe, and trustworthy.

#GenerativeAI

#AIEthics

#ResponsibleAI

#DataPrivacy

#Compliance

#GoogleCloud

The Future of XR Data: How AI and Google Cloud are Powering Immersive Experiences

July 6, 2025 by

Kee

Remember when we talked about supercharging your XR pipelines? We touched on the massive

amounts of data generated and consumed by VR, AR, and Mixed Reality (XR) applications. But

the sheer volume is only half the story. The real magic happens when we intelligently process

and interpret that data to create truly dynamic, personalized, and ultimately, more immersive

experiences.

That's where Artificial Intelligence (AI) steps into the XR landscape, and Google Cloud

provides the powerful engine to drive this transformation. Let's explore how AI is

revolutionizing XR data and the essential GCP services making it all possible.

AI: The Intelligent Interpreter of XR Data

AI is no longer a futuristic concept; it's the key to unlocking the full potential of XR.

Here's how AI is actively processing and interpreting the rich data within immersive environments:

- Intelligent Scene Understanding and Semantic Segmentation: AI models, trained on

vast datasets of real-world and synthetic environments, can analyze the visual and spatial data captured

by XR devices. This enables applications to understand the content of a scene by identifying objects,

people, and their relationships. Semantic segmentation goes a step further, labeling each pixel in the

environment with its corresponding object category. This understanding is crucial for realistic object

interactions, intelligent virtual assistants that can "see" their surroundings, and context-aware AR

overlays.

- Pose Estimation and Gesture Recognition: AI algorithms can track the precise movements

and poses of users within the XR environment, as well as recognize their gestures. This allows for natural

and intuitive interactions with virtual objects and interfaces, eliminating the need for cumbersome

controllers in many scenarios. Imagine manipulating virtual tools with your bare hands or communicating

with a virtual avatar through realistic body language.

- Gaze Tracking and Attention Analysis: By analyzing where a user is looking, AI can infer

their focus of attention and intent. This data can be used to dynamically adjust the level of detail in the

rendered environment (foveated rendering), personalize content based on user interest, and even gain valuable

insights into user behavior within the immersive experience.

- Natural Language Processing (NLP) and Conversational AI: Interacting with virtual agents

and environments through natural language is a cornerstone of immersive experiences. NLP allows users to

communicate using voice commands and text input, while conversational AI enables more engaging and realistic

dialogues with virtual characters.

- Personalized Experiences through Recommendation Engines: AI-powered recommendation engines

can analyze user behavior, preferences, and even real-time interactions within the XR environment to suggest

relevant content, experiences, or virtual companions. This personalization enhances user engagement and makes

immersive experiences more tailored to individual needs.

- Anomaly Detection for Enhanced Safety and Performance: AI can continuously monitor XR data

streams for anomalies, such as unexpected user behavior that might indicate discomfort or technical issues

affecting performance. This proactive detection can lead to safer and more reliable immersive experiences.

- Generative AI for Dynamic Content Creation: While still in its early stages, generative

AI is beginning to play a role in creating dynamic and diverse content within XR environments. Imagine AI

algorithms generating realistic textures, 3D models, or even entire virtual landscapes on demand, based on

user interactions or procedural rules.
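To make the gaze-tracking point more concrete, here is a small conceptual sketch of how a gaze direction could drive a level-of-detail choice, which is the idea behind foveated rendering. Real engines do this inside the rendering pipeline, so treat this purely as an illustration: the `LOD_BANDS` angles, the level names, and both function names are assumptions of mine, not values from any XR SDK.

```python
import math


def angle_between_deg(a, b):
    """Angle in degrees between two 3D vectors (neither may be zero-length)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    if norm == 0:
        raise ValueError("vectors must be non-zero")
    # Clamp to guard against floating-point values slightly outside [-1, 1].
    cosine = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cosine))


# Hypothetical bands: maximum angle from the gaze direction -> detail level.
LOD_BANDS = [(10.0, "high"), (30.0, "medium")]


def detail_level(gaze_dir, to_object, bands=LOD_BANDS):
    """Pick a detail level from how far an object sits from the gaze direction.

    `gaze_dir` is the direction the user is looking and `to_object` is the
    direction from the eye to the object, both as (x, y, z) tuples.
    """
    eccentricity = angle_between_deg(gaze_dir, to_object)
    for max_angle, level in bands:
        if eccentricity <= max_angle:
            return level
    return "low"


if __name__ == "__main__":
    gaze = (0.0, 0.0, -1.0)  # looking straight ahead along -Z
    for target in [(0.0, 0.0, -1.0), (0.3, 0.0, -1.0), (1.0, 0.0, -1.0)]:
        print(target, detail_level(gaze, target))
```

Objects near the center of the user's gaze get the most detail, and everything in the periphery can be rendered more cheaply.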

Google Cloud: The Essential Engine for XR Data Intelligence

Processing and interpreting the massive and often real-time data streams from XR applications demands a

robust and scalable cloud infrastructure. Google Cloud Platform (GCP) provides a comprehensive suite of

services perfectly suited for powering AI-driven XR experiences:

- Compute Engine: Provides the foundational virtual machines with powerful CPUs and

GPUs necessary for training demanding AI models and running inference workloads for real-time data

processing.

- Vertex AI: Google Cloud's unified AI platform is a game-changer for XR development.

It offers end-to-end machine learning workflows, from data labeling and model training to deployment

and monitoring. Vertex AI simplifies the process of building and deploying custom AI models for scene

understanding, pose estimation, NLP, and more. Pre-trained models and AutoML capabilities further

accelerate development.

- AI Platform Data Labeling: Efficiently labeling the vast amounts of visual and

spatial data required for training AI models is crucial. This service provides a managed platform

for collaborative data labeling with human-in-the-loop capabilities.

- Cloud Storage: Offers scalable and durable object storage for housing the

massive datasets used for training AI models and the diverse content within XR applications.

- BigQuery: A serverless, highly scalable data warehouse for analyzing large XR

datasets, gaining insights into user behavior, and training more effective recommendation engines.

- TensorFlow and PyTorch on GCP: Google Cloud provides optimized environments for

popular deep learning frameworks like TensorFlow and PyTorch, accelerating the development and

training of complex AI models for XR.

- Cloud Vision API and Media Translation API: Pre-trained APIs for tasks like object

recognition, text detection, and real-time speech translation can be readily integrated into XR applications,

providing out-of-the-box AI capabilities for scene understanding and communication.

- Cloud Natural Language API and Dialogflow: Enable developers to easily integrate

NLP and conversational AI into their XR experiences, allowing for natural language interactions with

virtual agents and environments.

- Cloud Functions and Cloud Run: Serverless compute options for deploying lightweight

AI inference endpoints or event-driven data processing pipelines within your XR architecture.

- Game Servers on Google Cloud (GKE): For multi-user XR experiences, GKE provides a

scalable and reliable platform for deploying and managing game servers, ensuring low latency and smooth

synchronization crucial for immersive interactions.
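As a rough illustration of the "lightweight AI inference endpoint" idea, here is a minimal sketch of a service that could run in a container on Cloud Run, using only the Python standard library. The gesture rule, the `thumb_index_distance_cm` field, and the function names are hypothetical placeholders for a real model. The one platform detail relied on is that Cloud Run tells the container which port to listen on through the `PORT` environment variable.

```python
import json
import os
from wsgiref.simple_server import make_server

JSON_HEADERS = [("Content-Type", "application/json")]


def classify_gesture(features):
    """Placeholder 'model': a hypothetical rule standing in for real inference."""
    distance = float(features.get("thumb_index_distance_cm", 99.0))
    return "pinch" if distance < 2.0 else "open_hand"


def app(environ, start_response):
    """Minimal WSGI app: POST a JSON object of features, get a JSON label back."""
    if environ.get("REQUEST_METHOD") != "POST":
        start_response("405 Method Not Allowed", JSON_HEADERS)
        return [b'{"error": "use POST"}']
    try:
        length = int(environ.get("CONTENT_LENGTH") or 0)
        features = json.loads(environ["wsgi.input"].read(length) or b"{}")
        if not isinstance(features, dict):
            raise ValueError("expected a JSON object")
        gesture = classify_gesture(features)
    except (ValueError, TypeError, KeyError):
        start_response("400 Bad Request", JSON_HEADERS)
        return [b'{"error": "invalid request body"}']
    body = json.dumps({"gesture": gesture}).encode("utf-8")
    start_response("200 OK", JSON_HEADERS + [("Content-Length", str(len(body)))])
    return [body]


def serve():
    """Start the server on the port given in PORT (defaults to 8080 locally)."""
    port = int(os.environ.get("PORT", "8080"))
    with make_server("0.0.0.0", port, app) as server:
        server.serve_forever()
```

Calling `serve()` from the container's entrypoint starts the endpoint. For real traffic you would likely swap `wsgiref` for a production server and replace `classify_gesture` with a call to an actual model.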

The Future is Immersive and Intelligent

The convergence of XR and AI, powered by the scalable infrastructure and comprehensive AI toolkit

of Google Cloud, is paving the way for truly transformative immersive experiences. From more realistic

and interactive games and simulations to enhanced collaboration and training environments, the ability

to intelligently process and interpret XR data will unlock a new era of digital engagement.

By embracing AI on Google Cloud, developers and businesses can move beyond simply creating virtual and

augmented worlds to building intelligent, adaptive, and deeply personalized immersive experiences that

will shape the future of how we learn, work, play, and connect.

#VR

#XR

#AR

#ImmersiveExperiences

#AIDataProcessing

#SpatialComputing

#GoogleCloud